Prioritizing magnetic resonance (MR) radiology functions for virtual operations: a feasibility study

Introduction

Magnetic resonance imaging (MRI) is an important diagnostic scanning tool for the detection and monitoring of specific diseases and conditions. However, equipment, operations, maintenance, and personnel (e.g., MR technologists) make the diagnostic tool expensive, so improving workflow efficiency is valuable. Traditionally, telemedicine in radiology focuses on the electronic transmission of images and radiological reports for image interpretation (1,2). However, the processes in the MRI workflow can be improved as advances in computer and communications technology give users better tools for the comprehensive exchange of information in real time. This research studies improvements in MR imaging processes that use expert technologists to alleviate workflow constrictions. In addition, image acquisition tasks that may be performed in a virtual environment are identified, and the efficiencies of acquiring MRI exams are evaluated. In this paper, virtual indicates work that may be performed in a remote operating center by a group of highly skilled technologists. We present the following article in accordance with the SQUIRE 2.0 reporting checklist (available at https://jhmhp.amegroups.com/article/view/10.21037/jhmhp-21-92/rc).

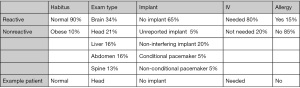

The hierarchical structure of the MR imaging functions is shown at the highest level in Figure 1 and is depicted using an SADT (Structured Analysis and Design Technique) format (3). The functions given in this section are the foundational elements of the modeling that is discussed in the next section. The first function, Review Schedule, occurs up to 3 weeks in advance of an appointment. The function reviews the exam parameters for exam code, exam protocol and sedation/anesthesia orders and then the patient’s record for allergies, body habitus, mobility needs, implants and other special needs. The Prep Patient function (A02) is performed by both technologists and medical assistants. The function begins with confirming arrival and patient identification in the electronic health record (EHR), then continues with clearing implants that were not reported at the time the appointment was made, assigning lockers for patient changing, starting an IV or other port access, handling special needs (e.g., pacemaker, claustrophobia) and conducting final verification before walking the patient to the scanning room. The Release Patient function (A04) begins when the patient leaves the scanning room and ends after the patient changes, receives post-exam instructions and has any ports removed.

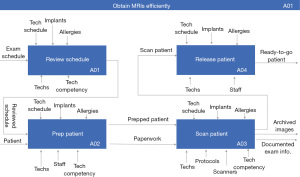

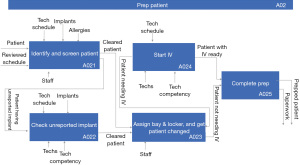

Figure 2 and Figure 3 show the next level of functional decomposition, which involves all activities that may affect image quality for the Prep Patient (A02) and the Scan Patient (A03) functions, respectively. Each top-level function, A01–A04, shown in Figure 1 was decomposed two additional levels to fully capture the MR workflow; however, only the Prep Patient (A02) and Scan Patient (A03) functions are given here because they are most relevant to the discussion. The Prep Patient function starts with identifying and screening the patient, which involves asking about the patient’s past medical treatments and procedures that may pose risks to patient safety during the MR exam (including but not limited to implants and surgical incisions). If an implant that is not reported in the patient’s record surfaces in the screening process, the details about the implant will need to be obtained and addressed by a knowledgeable technologist to have it cleared; otherwise, the patient will be assigned a bay and a locker and change into the proper attire. If the patient needs an IV as suggested by the exam code, then an IV will be started after changing. Prep Room includes preparing the coils and positioning devices as well as performing a last safety screening. Proper positioning of the patient ensures good images as well as patient safety and comfort. While the Review Schedule function has already occurred, there may be last-minute updates, so the order is reviewed and the protocol is selected from the MR scanner. The protocol may be updated with additional sequences at that point to ensure that the anatomy is fully scanned. As the protocol sequences are run in the Acquire Image function (A034), the technologist is continually monitoring the quality of the images. Some images, such as spectroscopy and angiograms, require post-processing before being sent to the picture archiving and communication system (PACS). Finally, exam details for billing are entered while the patient is escorted back to the changing bay and released.

After a baseline simulation model that represents the traditional MR workflow is developed and validated, two expert-usage scenarios are analyzed. Turn-around time (TAT) and wait time (WT) associated with the two expert-usage scenarios decrease across all modeled MR functions and patient attributes when compared to the baseline model. These findings are extended to explore workflow virtualization for a remote operating center that will not only increase efficiency but has the potential to increase patient access, workforce development and care standardization.

The next section of this paper presents the data sources of this study and explains the methods used to model traditional MR workflow and technologist expertise, the analyses that are conducted, and validation of the baseline model. The “Results” section describes the results of the baseline model and the expert-usage scenarios. An example is given which shows the benefits of using experts. Results of statistical analysis of different scenarios are also given in this section. The last section discusses the results and their implications.

Methods

Opportunities for virtual operations reside with tasks that are most demanding in the MR workflow. A cognitive task analysis (CTA) is conducted to identify those demanding MR activities. Based on the findings of the CTA, a discrete-event simulation (DES) model is developed and used to explore different expert-usage scenarios.

Data sources

The data used in this study come from two sources: observations and scanner log files. Over 180 hours of observation were spent in the MR operations area conducting the CTA, characterizing the operations for functional decomposition, and gathering metric data for the functional tasks. Log file data consisting of 68 variables on about 114K exams are used for scan time duration by anatomy, number of exams by exam type, and patient characteristics (i.e., age, weight, gender). This study focused on the 7 anatomies that represent >95% of all out-patient diagnostic scans (Table 1). The scanner log file data in Table 1 show that scanner time mean and variability differ by anatomy, so the anatomy of the patient being scanned needs to be accounted for. For simplicity, only the top five anatomies, which still account for >80% of all out-patient diagnostic scans, are modeled.

Table 1

| | Brain | Head | Liver | Abdomen | Spine | Knee | Heart | Other (arm, heart, prostate, etc.) |
|---|---|---|---|---|---|---|---|---|
| Mean | 39.1 | 34.81 | 37.4 | 40.05 | 44.72 | 35.59 | 75.20 | – |
| SD | 15.7 | 12.53 | 14.85 | 15.29 | 23.22 | 15.5 | 23.01 | – |
| % of all exams | 28% | 18% | 13% | 13% | 11% | 5.9% | 5.9% | 5.2% |

SD, standard deviation.

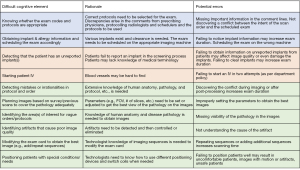

CTA: identification of demanding MR activities

Many of the functions described in Figures 1-3 are complex and the durations of the functions will be impacted by the experience of the technologist. As such, the MR workflow first needs to be characterized from a cognitive perspective as opposed to behavioral-based task analysis. Applied cognitive task analysis (ACTA) is used to elicit and capture difficult cognitive elements, rationale for their difficulty and potential errors (4-6). Figure 4 presents selected findings from the CTA that are considered “complicated” from a technologist’s perspective, and these findings identify the need for capturing and modeling expertise (as discussed in detail in the subsequent section). The challenging cognitive elements are synthesized, associated with MR functions and represent expert activity in the simulation model of the workflow. Expert technologists are utilized in all functions except Release Patient (A04).

Modeling expert knowledge

For a given task, a technologist’s completion time reflects a relative experience level. As expertise accrues, the time technologists spend on MR operational tasks tends to decrease (7). To model expert knowledge, task times are randomly sampled from designated probability distributions. Expert task time is not documented for any tasks in the MR workflow, so the triangular distribution is used as a subjective description of the population.

Two different classes of task time distributions are used in this study: the expert technologist task time distribution (“expert distribution” for short) and the average technologist task time distribution (“average distribution” for short). For any task, experts tend to have a smaller average task time and a smaller variability. It should be noted that historical data is not available for the expert distributions. In order to estimate these, the mode and the upper limit of the respective average distributions are decreased by a specific amount (assuming the lower limit stays the same). The percentage of the decrease is determined by findings in Weinger & Slagle (7) and verified by SMEs.

Discrete-event simulation (DES) model

DES has long been used to model health care systems (8-12). A DES model is developed and implemented using the SimPy package in Python (Version 3.8.3) (13,14). The key output metrics include TAT and WT. TAT is defined as the time from when a patient begins the identity confirmation and safety screening function (A021) to when the patient is returned to the changing area (A04). WT is the time that the patient is waiting for a resource such as a technologist, medical assistant or scanner; WT is included in the TAT.

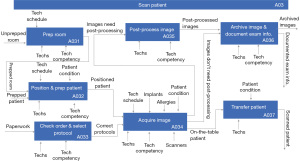

For each simulation run, a pool of 500 patients is generated, and each patient is assigned five attributes: (I) habitus (normal, obese); (II) exam type (brain, head, liver, spine, abdomen); (III) implant (no implant, unreported implant, non-interfering implant, conditional pacemaker, non-conditional pacemaker); (IV) IV (needed, not needed); (V) allergy (yes, no). The frequency of occurrence of each attribute is determined by observation, log-file analysis and subject matter experts. These 5 anatomies occur most frequently and comprise approximately 80% of the MR exams. Figure 5 shows how a simulated patient is represented and stored as a vector in the patient pool. The last row is an example patient who has a normal habitus, is having a scan related to the head, has no implants that need to be considered during the exam, needs an IV for contrast administration and has no known allergy (e.g., contrast reaction and/or claustrophobia). While claustrophobia is not an allergy, this field identifies conditions that will impact the scan; if a patient is claustrophobic, additional time (sampled from a Gaussian distribution) is added to task duration.
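The patient pool described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the attribute levels come from the text, but the weights, the dictionary layout and the helper names `make_patient` and `make_pool` are hypothetical, since the study's actual frequencies (from observation, log files and subject matter experts) are not listed here.

```python
import random

# Attribute levels as listed in the text. The weights are hypothetical
# placeholders, NOT the frequencies used in the study.
ATTRIBUTES = {
    "habitus": (["normal", "obese"], [0.7, 0.3]),
    "exam_type": (["brain", "head", "liver", "spine", "abdomen"],
                  [0.34, 0.22, 0.16, 0.13, 0.15]),
    "implant": (["no implant", "unreported implant", "non-interfering implant",
                 "conditional pacemaker", "non-conditional pacemaker"],
                [0.80, 0.05, 0.10, 0.03, 0.02]),
    "iv": (["needed", "not needed"], [0.4, 0.6]),
    "allergy": (["yes", "no"], [0.1, 0.9]),
}


def make_patient(rng):
    """Draw one patient as a dict of five categorical attributes."""
    return {name: rng.choices(levels, weights=weights, k=1)[0]
            for name, (levels, weights) in ATTRIBUTES.items()}


def make_pool(size=500, seed=0):
    """Build a reproducible patient pool of the given size."""
    rng = random.Random(seed)
    return [make_patient(rng) for _ in range(size)]


pool = make_pool()
print(len(pool), pool[0])
```

Seeding the generator keeps a pool reproducible across replications, which makes it easier to compare scenarios on identical patients.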

The patients in the pool are then “released” into the simulated MRI unit and move through each function (i.e., task) in the MR workflow sequentially. In the model, patients arrive at a fixed interval of 15 minutes. The simulation model contains 12 functions in total, which are decomposed from the highest-level functions shown in Figure 1: the four sub-functions (A021–A024) of Prep Patient, the seven sub-functions (A031–A037) of Scan Patient, and the Release Patient function (A04). The model tracks state changes of the individual patient as well as other entities in the simulation model (e.g., technologists and scanners). The simulation terminates when the last patient finishes their exam and is released. Examples of functions outside the scope of the model include those related to scheduling, consent and safety screening, insurance pre-authorization, contrast administration and scanner exam assignment.
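To make the mechanics of TAT and WT concrete, the following is a heavily simplified sketch and not the study's SimPy model. It collapses the 12 functions into three stages (prep, scan, release), assumes a single pooled scanner resource with first-come-first-served service, and uses only the Python standard library. The number of scanners and the prep and release distributions are hypothetical; the scan distribution borrows the average spine parameters from Table 2 purely as an example.

```python
import heapq
import random
from statistics import mean, stdev


def simulate(n_patients=500, interarrival=15.0, n_scanners=4, seed=0):
    """Return (mean TAT, mean WT) in minutes for one replication.

    Hypothetical simplification: prep -> scan -> release, where only the
    scan stage competes for a limited resource (FIFO, n_scanners servers).
    """
    rng = random.Random(seed)
    free_at = [0.0] * n_scanners      # min-heap of times each scanner frees up
    heapq.heapify(free_at)
    tats, waits = [], []
    for i in range(n_patients):
        arrival = i * interarrival    # fixed 15-minute arrival interval
        # random.triangular takes (low, high, mode).
        prep = rng.triangular(5.0, 10.0, 7.0)      # placeholder values
        scan = rng.triangular(22.0, 67.0, 45.0)    # spine, average distribution
        release = rng.triangular(3.0, 8.0, 5.0)    # placeholder values
        # Prep varies by at most 5 minutes, less than the arrival interval,
        # so patients become ready in arrival order and FIFO holds.
        ready = arrival + prep
        start = max(ready, heapq.heappop(free_at))  # earliest free scanner
        finish_scan = start + scan
        heapq.heappush(free_at, finish_scan)
        waits.append(start - ready)                   # WT: waiting for a resource
        tats.append(finish_scan + release - arrival)  # TAT includes WT
    return mean(tats), mean(waits)


# Replicate the run 30 times and summarize across replications.
results = [simulate(seed=s) for s in range(30)]
tat_means = [r[0] for r in results]
wt_means = [r[1] for r in results]
print(f"mean TAT {mean(tat_means):.2f} (SD {stdev(tat_means):.2f})")
print(f"mean WT  {mean(wt_means):.2f} (SD {stdev(wt_means):.2f})")
```

A full model would give each of the 12 functions its own duration distribution and its own resource (technologist, medical assistant or scanner), which is what a process-based library such as SimPy is designed for.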

Baseline model validation

The baseline simulation model is validated against MR inpatient/ED TAT data collected between March 2019 and February 2020 at the academic facility where the study is conducted. Since inpatients and ED patients will usually have been prepped (i.e., implants identified, attire changed, IV started) by the time they show up at the MR unit, the data only includes time from when they enter the scanner room to the time they leave the room; this process is captured by the Scan Patient (A03) function in the simulation model, thus the simulated task time for Scan Patient is compared to the historical data for the purpose of model validation.

The data used for model validation requires elimination of duplicate records, infeasible exam records and concatenation of exam cards. Errors are also observed in the data: exams that have durations that are too short/long to be correct. For example, brain stroke scans (MRI BRAIN STROKE) that have a duration of 0 minutes and brain scans (MRI BRAIN WO/W CONT) that have a duration of 827 minutes are incorrect. To correct this, exams were grouped by exam code and then exams with durations that are below the 10th percentile or above the 90th percentile in the category are removed from the data. The validation data consists of 103 different exam codes; the majority of which occur infrequently. The top 14 most frequently occurring exam codes were retained and these represent approximately 80% of the total cases. Average durations by exam code are calculated, and the average duration across different exam codes are obtained as the weighted average.
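The percentile trimming and weighted averaging described above can be sketched as follows. This is an illustration only: the record layout `(exam_code, duration_minutes)`, the function names and the sample durations are hypothetical, and the quantile interpolation method used in the study is not stated, so Python's default is used here.

```python
from collections import defaultdict
from statistics import mean, quantiles


def trim_by_code(records):
    """Keep exams whose duration lies within the 10th to 90th percentile
    of their own exam code. `records` is an iterable of (code, minutes)."""
    by_code = defaultdict(list)
    for code, minutes in records:
        by_code[code].append(minutes)
    kept = {}
    for code, durations in by_code.items():
        if len(durations) < 2:        # too few points to estimate percentiles
            kept[code] = list(durations)
            continue
        deciles = quantiles(durations, n=10)    # nine cut points
        p10, p90 = deciles[0], deciles[-1]
        kept[code] = [d for d in durations if p10 <= d <= p90]
    return kept


def weighted_mean_duration(kept):
    """Average the per-code mean durations, weighted by exam count."""
    per_code = {code: (mean(d), len(d)) for code, d in kept.items() if d}
    total = sum(n for _, n in per_code.values())
    return sum(m * n for m, n in per_code.values()) / total


# Hypothetical durations, including the 0- and 827-minute errors from the text.
sample = [("MRI BRAIN WO/W CONT", d)
          for d in (0, 30, 32, 34, 35, 36, 38, 40, 42, 44, 827)]
sample += [("MRI BRAIN STROKE", d) for d in (20, 20, 20, 20)]

cleaned = trim_by_code(sample)
print({code: len(d) for code, d in cleaned.items()})
print(round(weighted_mean_duration(cleaned), 2))
```

Trimming within each exam code, rather than across the pooled data, keeps long but legitimate exam types from being discarded as outliers.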

Prior to cleaning, 2,931 exams comprise the data; after cleaning, 1,618 exams remain (446 exams were excluded due to duplication, 474 exams were excluded because the exam codes were not in this study, and 393 exams were excluded due to unreasonable durations). The mean duration for the scan-patient function in the historical data is 41.36 minutes, and the mean duration for the simulation model scan-patient function is 46.70 minutes. The (µ ± 1σ) intervals on mean duration from the historical inpatient data and from the scan-patient function of the simulation model are (28.91, 53.81) and (37.04, 56.36), respectively. The difference is likely caused by a small difference in the types of exams between inpatients and outpatients. Taking these factors into consideration, the subject matter experts conclude that the simulation model output is in accordance with the historical data, and the simulation model is validated.
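As a quick arithmetic check, the two reported intervals are consistent with mean plus or minus one standard deviation: their midpoints reproduce the stated means of 41.36 and 46.70 minutes. The sketch below recovers the implied means and standard deviations and tests whether the intervals overlap. The overlap test is only an informal illustration; the validation in the text rests on the judgment of the subject matter experts, and the function names are hypothetical.

```python
def recover_mean_sd(lower, upper):
    """Recover (mean, sd) from a (mean - sd, mean + sd) interval."""
    return (lower + upper) / 2, (upper - lower) / 2


def intervals_overlap(a, b):
    """True when two closed intervals share at least one point."""
    return a[0] <= b[1] and b[0] <= a[1]


historical = (28.91, 53.81)   # reported interval, historical inpatient/ED data
simulated = (37.04, 56.36)    # reported interval, simulated Scan Patient (A03)

hist_mean, hist_sd = recover_mean_sd(*historical)
sim_mean, sim_sd = recover_mean_sd(*simulated)
print(f"historical: mean={hist_mean:.2f}, sd={hist_sd:.2f}")
print(f"simulated:  mean={sim_mean:.2f}, sd={sim_sd:.2f}")
print("intervals overlap:", intervals_overlap(historical, simulated))
```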

Analyses

Based on the validated baseline model and findings of the CTA, we explore opportunities for formalizing expert usage in the MR workflow as a precursor to workflow virtualization for remote operations and evaluate the impact of such expert usage on system metrics such as TAT and WT. Specifically, two expert-usage scenarios are identified and examined, whose performance is then compared to the baseline model given in the “Results” section which represents the traditional MR workflow. Table 2 shows the functions that may require expert help as a result of the CTA and thus have both an average distribution and an expert distribution. Weinger & Slagle (7) found that for a specific task, expert anesthesiologists may spend up to 50% less time than novice anesthesiologists. Since the MR technologists observed were not complete novices, a task time reduction well below 50% is assumed. Specifically, the mode is decreased by 10% and the upper limit by 20% in an expert distribution, compared to those of the average distribution. The values in Table 2 are used in modeling each scenario.

Table 2

| Function | Average distribution: parameters (minutes) | Average distribution: mean | Average distribution: Std. Dev. | Expert distribution: parameters (minutes) | Expert distribution: mean | Expert distribution: Std. Dev. |
|---|---|---|---|---|---|---|
| Check unreported implant (A022) | (5, 7, 30) | 14.00 | 5.67 | (5, 6.3, 24) | 11.77 | 4.33 |
| Start IV (A024) | (3, 7, 20) | 10.00 | 3.63 | (3, 4.5, 16) | 7.83 | 2.90 |
| Prep room (A031) | (2, 3, 4.5) | 3.17 | 0.51 | (2, 2.7, 3.6) | 2.77 | 0.33 |
| Position & prep patient (A032) | (2.1, 3.9, 5.2) | 3.73 | 0.64 | (2.1, 3.5, 4.2) | 3.27 | 0.44 |
| Check order & select protocol (A033) | (0.5, 0.8, 1.3) | 0.87 | 0.16 | (0.5, 0.7, 1) | 0.73 | 0.10 |
| Acquire image (A034) | Brain: (20, 35, 40); Head: (23, 35, 48); Liver: (30, 40, 55); Spine: (22, 45, 67); Abdm: (25, 40, 55) | Brain: 31.67; Head: 35.33; Liver: 41.67; Spine: 44.67; Abdm: 40.00 | Brain: 4.25; Head: 5.11; Liver: 5.14; Spine: 9.19; Abdm: 6.12 | Brain: (20, 31.5, 32); Head: (23, 31.5, 38.5); Liver: (30, 36, 44); Spine: (20, 40.5, 60.3); Abdm: (25, 36, 44) | Brain: 27.83; Head: 31.00; Liver: 36.67; Spine: 40.27; Abdm: 35.00 | Brain: 2.77; Head: 3.17; Liver: 2.87; Spine: 8.23; Abdm: 3.89 |
| Post-process image (A035) | (4.5, 6, 8) | 6.17 | 0.72 | (4.5, 5.4, 6.4) | 5.43 | 0.39 |

MR, magnetic resonance; Std. Dev., standard deviation; Abdm, abdomen.
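The means and standard deviations in Table 2 follow from the standard formulas for a triangular distribution with lower limit a, mode b and upper limit c: the mean is (a + b + c)/3 and the variance is (a² + b² + c² − ab − ac − bc)/18. The sketch below applies the stated rule (mode reduced by 10%, upper limit by 20%, lower limit unchanged) and reproduces the Check unreported implant (A022) row. The function names are hypothetical. Some tabulated expert parameters (for example, the Start IV mode of 4.5) differ from what this rule gives, so the table remains the authoritative source for the values used in the model.

```python
from math import sqrt


def triangular_mean_sd(low, mode, high):
    """Mean and standard deviation of a triangular(low, mode, high) distribution."""
    mean = (low + mode + high) / 3
    variance = (low**2 + mode**2 + high**2
                - low * mode - low * high - mode * high) / 18
    return mean, sqrt(variance)


def expert_from_average(low, mode, high, mode_cut=0.10, upper_cut=0.20):
    """Shrink the mode and upper limit; keep the lower limit unchanged."""
    return low, mode * (1 - mode_cut), high * (1 - upper_cut)


average = (5, 7, 30)                     # Check unreported implant (A022)
expert = expert_from_average(*average)   # approximately (5, 6.3, 24)

avg_mean, avg_sd = triangular_mean_sd(*average)
exp_mean, exp_sd = triangular_mean_sd(*expert)
print(f"average: mean={avg_mean:.2f}, sd={avg_sd:.2f}")
print(f"expert:  mean={exp_mean:.2f}, sd={exp_sd:.2f}")
```

When sampling such a distribution with Python's standard library, note that `random.triangular` takes its arguments in the order (low, high, mode), not (low, mode, high).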

The first scenario studied is an All-Expert-Onsite scenario, where all tasks in the workflow that would benefit from increased capability are performed onsite by expert personnel only. Such tasks include check unreported implant (A022), start IV (A024), prep room (A031), position and prep patient (A032), check order and select protocol (A033), acquire image (A034), and post-process image (A035). In this model all the task durations are randomly drawn from only the expert distributions shown in Table 2. This scenario effectively represents a bound for system performance; that is, it represents the shortest potential TAT and WT.

The second scenario studied is a Remote-Technologist scenario, which moves towards virtualization by offloading the non-patient-facing tasks to a remote location staffed exclusively by experts. Only non-patient-facing tasks are considered for offloading: although expert help may be needed for some patient-facing tasks, those tasks cannot be effectively assisted in a virtual environment. For example, Start IV is a function where expert help may be needed because some patients have blood vessels that are hard to find. Policy states that the same person shall not stick a patient more than two times without success; therefore, in cases of a difficult IV, an expert technologist or nurse may be called in for help. However, this is not a task that can be virtualized, since it requires face-to-face interaction with patients. Functions that do not require direct contact with patients are opportunities for virtualization; they include check unreported implant (A022), check order and select protocol (A033), acquire image (A034) and post-process image (A035). The remaining functions in Table 2 require direct contact with patients or have to be performed locally. This scenario represents a situation where a local site could be administered by medical assistants and/or nurses, and a remote site could be structured to support multiple local sites.

Statistical analysis

All statistical analyses were done in the R language using RStudio (Version 1.1.456).

Results

Baseline model result

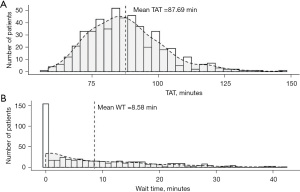

Figure 6A shows the distribution of TATs for 500 simulated patients from one simulation run; Figure 6B shows the distribution of WTs for the same 500 simulated patients. The baseline model is replicated 30 times and the mean TAT and mean WT over the 30 simulation runs are shown in Table 3.

Table 3

| | Mean TAT (minutes) | Mean WT (minutes) |
|---|---|---|
| Baseline model | 87.69 (1.45) | 8.58 (1.27) |

The standard deviation of the mean is given in parentheses. TAT, turn-around time; WT, wait time.

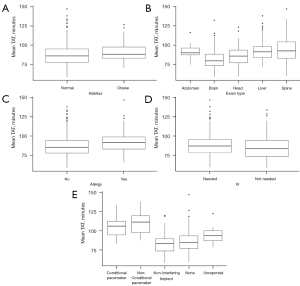

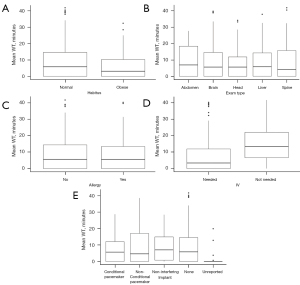

The baseline model provides insights into the patient attributes. Figure 7 shows that exam type (Figure 7B) and implant status (Figure 7E) tend to affect exam duration while patient habitus, patient allergies and IV requirements do not. Exam type and implant status are further explored. Figure 8 shows that patient characteristics do not significantly affect WTs. MR exam durations vary by anatomy but utilizing experts may reduce longer scan times and reduce TAT. In addition, improving implant knowledge may also reduce TAT. Note that each plotted point is the mean TAT from one replication of the baseline model.

Expert-usage scenario result

Each of the expert models is replicated 30 times, and the results are shown in Table 4. Under all scenarios, the use of expert technologists reduces TAT and WT. By simply offloading the non-patient-facing functions to expert technologists in the remote-technologist model, the average TAT per exam is reduced by up to 12.54 minutes and the average WT is reduced by up to 6.44 minutes when compared to current operations, where expert help is provided on an informal basis. Note that the remote-technologist model has a comparatively longer TAT and WT than the all-expert-onsite model because only the non-patient-facing functions are offloaded. In other words, this model uses durations from only four expert distributions, whereas the all-expert-onsite model draws every duration listed in Table 2 from the expert distributions.

Table 4

| Models | Mean TAT (min) | Mean WT (min) |
|---|---|---|
| Baseline model | 87.69 (1.45) | 8.58 (1.27) |
| All-expert-onsite model | 71.59 (0.40) | 1.25 (0.20) |
| Remote-technologist model | 75.15 (0.46) | 2.14 (0.28) |
| Example (ABD, SPN, unreported implant) | 83.62 (0.81) | 5.54 (0.65) |

The standard deviation is given in (). TAT, turn-around time; WT, wait time; ABD, abdomen; SPN, spine.
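The reductions quoted in the text can be reproduced directly from the means in Table 4. The short sketch below subtracts each model's mean TAT and WT from the baseline; the dictionary layout and function name are illustrative choices only.

```python
# Mean (TAT, WT) in minutes, copied from Table 4.
TABLE_4 = {
    "Baseline model": (87.69, 8.58),
    "All-expert-onsite model": (71.59, 1.25),
    "Remote-technologist model": (75.15, 2.14),
    "Example (ABD, SPN, unreported implant)": (83.62, 5.54),
}


def reductions(table, baseline="Baseline model"):
    """Minutes saved in mean TAT and mean WT relative to the baseline."""
    base_tat, base_wt = table[baseline]
    return {name: (round(base_tat - tat, 2), round(base_wt - wt, 2))
            for name, (tat, wt) in table.items() if name != baseline}


saved = reductions(TABLE_4)
for name, (tat_saved, wt_saved) in saved.items():
    print(f"{name}: TAT -{tat_saved:.2f} min, WT -{wt_saved:.2f} min")
```

The remote-technologist row gives the 12.54-minute TAT and 6.44-minute WT reductions cited in the text.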

An example

While the all-expert-onsite model represents an idealized situation where all tasks are performed by experts, a more realistic situation is that experts are “tapped” on an informal basis when needed. Moreover, it is assumed in this example that the expert workload comprises both scheduled and on-demand components. The scheduled workload includes exams that are known to be difficult to perform for the less experienced technologists, and the on-demand workload includes impromptu requests for help.

Note that abdomen and spine exams have longer image acquisition durations and/or variability, and are generally perceived as more challenging by MR technologists. Therefore, in this example, when these exams are on the schedule, they are routed to a remote operations center staffed by expert technologists. Since unreported implants require evaluation by an expert technologist, they are also routed to the remote operations center; this represents an unscheduled or on-demand workload for the technologist. Table 4 (bottom row) shows the metrics for this example: offloading more complex exams and potentially risky implant situations reduces TAT and WT when compared to the current operations (i.e., baseline model).

Comparison

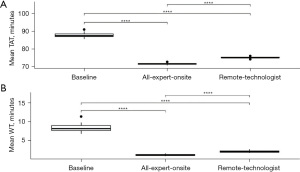

Pairwise t-tests are used to determine if statistically significant differences exist between the mean TAT and mean WT of all three models: the baseline model and the two expert models. The box plots in Figure 9A represent the mean TAT for each model and the stars above the horizontal lines denote the level of significance for each of the 3 pairwise comparisons—all of which are significantly different. Figure 9B plots the same information for mean WT. Again, all pairwise comparisons are significant. The findings suggest that by having experts in the workflow, efficiency gains and reductions in variability can be achieved. What’s more, the higher the expert involvement, the more efficiency gains are realized. While the remote-technologist model does have longer TAT and WTs than the all-expert-onsite model, the efficiency gains are substantial compared to the current workflow.

Discussion

Teleradiology is typically associated with the electronic transmission and processing of radiological images; however, advances in communication technology can support more efficient and reliable exchange of information in real time that is distinct from MR scanner technology. At the same time, healthcare is shifting toward a value-based care model. While the concept of value-based care is being defined and aligned, virtualizing MR image acquisition can increase value by optimizing workflow, improving staff efficiency and providing an opportunity for workforce development.

In this paper, the activities in an MR workflow were analyzed from the competency perspective of a technologist, and functions with increased cognitive demand were identified. These functions represent opportunities for improved efficiencies by utilizing experts to complete them. Using DES, two expert models were explored. Results show that by just offloading the functions of ‘checking unreported implants’ and ‘acquiring image’ to expert technologists, the average TAT per exam is reduced by up to 12.54 minutes and the average waiting time is reduced by up to 6.44 minutes when compared to the traditional MR workflow, which includes the situation where expert help is provided on an informal basis.

Since these key functions do not require patient interaction, they are suitable for virtualization. The findings indicate that using experts in a remote capacity is feasible as efficiency is significantly improved. In addition, using experts in a virtual environment has other benefits:

- Increased utilization of scanners. Expert technologists would have the ability to support multiple imaging rooms which may include scanners/sites that are underutilized in rural areas, for example;

- Improved patient experience. Expert technologists are a shared asset in a network so patients may not need to travel to another location to receive access to high-quality care;

- Continued workforce development. Developing expert technologists provides a path to recognition, continues competency development, and increases job satisfaction;

- Increased care standardization. A remote center staffed with expert technologists enables the same high level of image quality, protocol, and diagnostic outcomes in a rural setting as well as an academic medical center.

The limitations of this work lie in what was not studied. While the anatomies, exam codes, technologist and MA functions, and workflow processes included in this work represent more than 80% of the MR imaging acquisition workload, there could be a bias that results from the exams not included. In addition, the study site is typical of large academic medical centers and may not reflect small radiology clinics or diagnostic imaging chains. However, the use of discrete event simulation as the underlying modeling mechanism provides generalizability for use in not only MR image acquisition but other healthcare delivery workflows where expert knowledge affects process times and where virtual operations may be considered.

Advancements made in virtual operations not only contribute to faster TATs and a better patient experience, but also help increase overall productivity of MR departments while providing more operational sustainability. A remote site composed of expert technologists warrants further work in the areas of assessing and characterizing technologist competency, balancing image acquisition tasks with cognitive load in a virtual environment to maintain expert resiliency and capacity and understanding the impact of task handoff between on-site and virtual locations on patient safety.

Acknowledgments

We would like to thank the MR technologists at UW Medical Center who provided invaluable help in the interviews and the collection of data for this research. We would also like to thank the reviewers for their work and insights.

Funding: This work was supported by the National Science Foundation (No. 1738265). The funding agreement ensured the authors’ independence in designing the study, interpreting the data, writing, and publishing the report.

Footnote

Provenance and Peer Review: This article was commissioned by the Guest Editors (Stephen J. O’Connor, Nancy Borkowski and Katherine A. Meese) for the series “Shaping Tomorrow’s Healthcare Systems: Key Stakeholders’ Expectations and Experiences” published in Journal of Hospital Management and Health Policy. The article has undergone external peer review.

Reporting Checklist: The authors have completed the SQUIRE 2.0 reporting checklist. Available at https://jhmhp.amegroups.com/article/view/10.21037/jhmhp-21-92/rc

Data Sharing Statement: Available at https://jhmhp.amegroups.com/article/view/10.21037/jhmhp-21-92/dss

Conflicts of Interest: All authors have completed the ICMJE uniform disclosure form (available at https://jhmhp.amegroups.com/article/view/10.21037/jhmhp-21-92/coif). The series “Shaping Tomorrow’s Healthcare Systems: Key Stakeholders’ Expectations and Experiences” was commissioned by the editorial office without any funding or sponsorship. OS has several filed patents in the areas of virtual operations, workflow improvements, etc. The authors have no other conflicts of interest to declare.

Ethical Statement: The authors are accountable for all aspects of the work in ensuring that questions related to the accuracy or integrity of any part of the work are appropriately investigated and resolved.

Open Access Statement: This is an Open Access article distributed in accordance with the Creative Commons Attribution-NonCommercial-NoDerivs 4.0 International License (CC BY-NC-ND 4.0), which permits the non-commercial replication and distribution of the article with the strict proviso that no changes or edits are made and the original work is properly cited (including links to both the formal publication through the relevant DOI and the license). See: https://creativecommons.org/licenses/by-nc-nd/4.0/.

References

1. Larson DB, Cypel YS, Forman HP, et al. A comprehensive portrait of teleradiology in radiology practices: results from the American College of Radiology's 1999 Survey. AJR Am J Roentgenol 2005;185:24-35.

2. Hunter TB. Teleradiology: A Brief Overview. 2014 March 03 [cited 2020 Oct 02]. In: The Arizona Telemedicine Program Blog [Internet]. Tucson: University of Arizona Health Sciences [about 1 screen]. Available online: https://telemedicine.arizona.edu/blog/teleradiology-brief-overview

3. Lakhoua MN, Khanchel F. Overview of the methods of modeling and analyzing for the medical framework. Sci Res Essays 2011;6:3942-8.

4. Militello LG, Hutton RJ, Pliske RM, et al. Applied cognitive task analysis (ACTA) methodology. Klein Associates Inc. Fairborn OH; 1997 Nov 1.

5. Clark RE, Estes F. Cognitive task analysis for training. Int J Educ Res 1996;25:403-17.

6. Shachak A, Hadas-Dayagi M, Ziv A, et al. Primary care physicians' use of an electronic medical record system: a cognitive task analysis. J Gen Intern Med 2009;24:341-8.

7. Weinger MB, Slagle JM. Task and workload analysis of the clinical performance of anesthesia residents with different levels of experience. Anesthesiology 2001;95:A1145.

8. Fone D, Hollinghurst S, Temple M, et al. Systematic review of the use and value of computer simulation modelling in population health and health care delivery. J Public Health Med 2003;25:325-35.

9. Sciomachen AN, Tanfani EL, Testi AN. Simulation models for optimal schedules of operating theatres. IJ of Simulation 2005;6:26-34.

10. Vissers JM, Adan IJ, Dellaert NP. Developing a platform for comparison of hospital admission systems: An illustration. Eur J Oper Res 2007;180:1290-301.

11. Günal MM, Pidd M. Discrete event simulation for performance modelling in health care: a review of the literature. J Simul 2010;4:42-51.

12. Akkerman R, Knip M. Reallocation of beds to reduce waiting time for cardiac surgery. Health Care Manag Sci 2004;7:119-26.

13. Matloff N. Introduction to discrete-event simulation and the simpy language. Davis, CA. Dept of Computer Science. University of California at Davis. Retrieved on August 2020;2:1-33.

14. Meurer A, Smith CP, Paprocki M, et al. SymPy: symbolic computing in Python. PeerJ Computer Science 2017;3:e103.

Cite this article as: Li L, Mastrangelo C, Briller N, Tesfaldet M, Starobinets O, Stapleton S. Prioritizing magnetic resonance (MR) radiology functions for virtual operations: a feasibility study. J Hosp Manag Health Policy 2022;6:21.